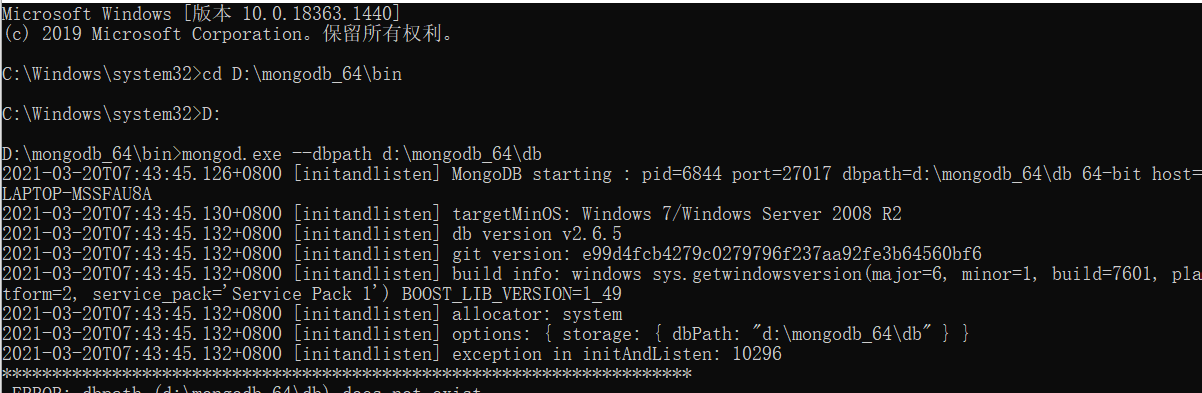

2021-03-20T07:43:45.126+0800 [initandlisten] MongoDB starting : pid=6844 port=27017 dbpath=d:\mongodb_64\db 64-bit host=LAPTOP-MSSFAU8A

2021-03-20T07:43:45.130+0800 [initandlisten] targetMinOS: Windows 7/Windows Server 2008 R2

2021-03-20T07:43:45.132+0800 [initandlisten] db version v2.6.5

2021-03-20T07:43:45.132+0800 [initandlisten] git version: e99d4fcb4279c0279796f237aa92fe3b64560bf6

2021-03-20T07:43:45.132+0800 [initandlisten] build info: windows sys.getwindowsversion(major=6, minor=1, build=7601, platform=2, service_pack='Service Pack 1') BOOST_LIB_VERSION=1_49

2021-03-20T07:43:45.132+0800 [initandlisten] allocator: system

2021-03-20T07:43:45.132+0800 [initandlisten] options: { storage: { dbPath: "d:\mongodb_64\db" } }

2021-03-20T07:43:45.132+0800 [initandlisten] exception in initAndListen: 10296

*********************************************************************

ERROR: dbpath (d:\mongodb_64\db) does not exist.

Create this directory or give existing directory in --dbpath.

See http://dochub.mongodb.org/core/startingandstoppingmongo

*********************************************************************

, terminating

2021-03-20T07:43:45.133+0800 [initandlisten] dbexit:

2021-03-20T07:43:45.133+0800 [initandlisten] shutdown: going to close listening sockets...

2021-03-20T07:43:45.133+0800 [initandlisten] shutdown: going to flush diaglog...

2021-03-20T07:43:45.133+0800 [initandlisten] shutdown: going to close sockets...

2021-03-20T07:43:45.133+0800 [initandlisten] shutdown: waiting for fs preallocator...

2021-03-20T07:43:45.133+0800 [initandlisten] shutdown: lock for final commit...

2021-03-20T07:43:45.133+0800 [initandlisten] shutdown: final commit...

2021-03-20T07:43:45.134+0800 [initandlisten] shutdown: closing all files...

2021-03-20T07:43:45.135+0800 [initandlisten] closeAllFiles() finished

2021-03-20T07:43:45.135+0800 [initandlisten] dbexit: really exiting now

Teacher, could you tell me why the connection fails here? My commands are all the same as the ones you entered.
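Reading the log above, the failure does not look like a connection problem as such: `mongod` exits during startup because the dbpath `d:\mongodb_64\db` does not exist, and the error text itself says to create the directory or pass an existing one to `--dbpath`. The following is a minimal sketch of creating that directory before starting the server. The helper name `ensure_dbpath` is hypothetical and only for illustration.

```python
import os


def ensure_dbpath(dbpath: str) -> bool:
    """Create the data directory if it is missing.

    Returns True if the directory was created, False if it already existed.
    (Hypothetical helper, not part of MongoDB.)
    """
    if os.path.isdir(dbpath):
        return False
    # Creates intermediate directories as well, e.g. both mongodb_64 and db.
    os.makedirs(dbpath)
    return True


# Example usage, with the path taken from the log:
#   ensure_dbpath(r"d:\mongodb_64\db")
# Then start the server again, for example:
#   mongod --dbpath d:\mongodb_64\db
```

Creating the folder by hand in Explorer or with `mkdir` should have the same effect. Once the directory exists, the `dbpath ... does not exist` error should no longer appear at startup.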