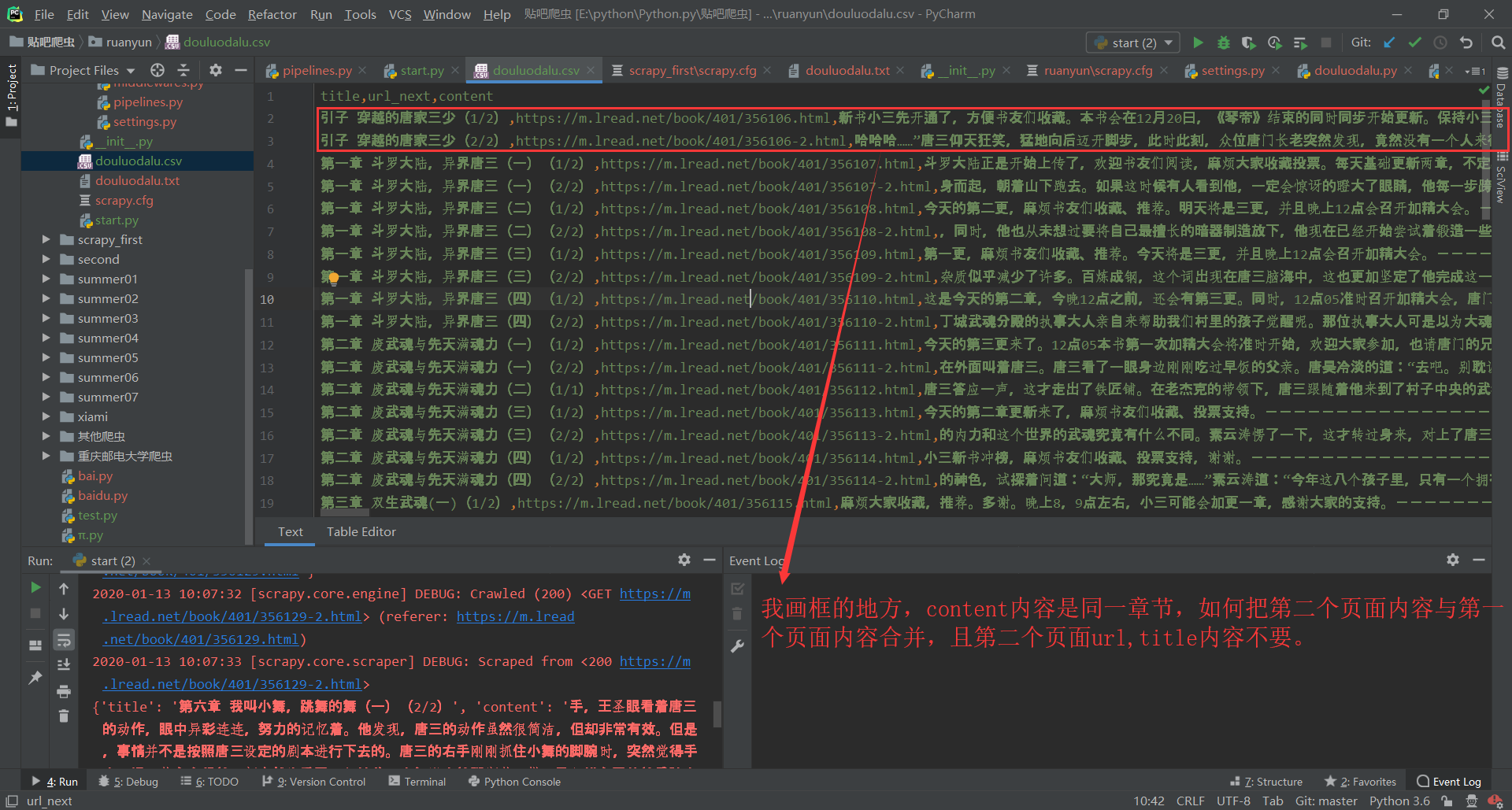

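I'm trying to scrape a novel from https://m.lread.net. On this site a single chapter is sometimes split across two HTML pages. How can I merge the content of the second page with the content of the previous page and save the result to a CSV file?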

#pipelines.py

import csv

class RuanyunPipeline(object):

    def __init__(self):
        # newline="" lets the csv module control the line endings
        self.filename = open('douluodalu.csv', 'w', newline="", encoding='utf-8')
        self.writer = csv.writer(self.filename)
        self.writer.writerow(['title', 'url_next', 'content'])

    def process_item(self, item, spider):
        if '-' not in item['url_now']:
            self.writer.writerow([item['title'], item['url_now'], item['content']])
        else:
            # For now both branches write the same row; this '-' case is
            # where the page should be merged into the previous one.
            self.writer.writerow([item['title'], item['url_now'], item['content']])
        return item

    def close_spider(self, spider):
        if self.filename:
            self.filename.close()
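
One possible way to do the merge is to buffer the pages in the pipeline and write the CSV only when the spider closes, instead of writing one row per item. The sketch below is not tested against the real site and rests on a few assumptions: that a first page looks like `.../100.html` and its continuation like `.../100-2.html` (guessed from the `'-'` check in the code above), that `item['content']` is a plain string, and that the names `MergingCsvPipeline`, `split_url` and `PAGE_SUFFIX` are made up for illustration.

```python
import csv
import re
from collections import defaultdict

# Assumed URL shape (not confirmed by the post): ".../100.html" is page 1
# and ".../100-2.html" is page 2 of the same chapter.
PAGE_SUFFIX = re.compile(r"-(\d+)(?=\.html$)")


def split_url(url):
    """Return (chapter_url, page_number) for a page URL."""
    match = PAGE_SUFFIX.search(url)
    if match is None:
        return url, 1
    return PAGE_SUFFIX.sub("", url), int(match.group(1))


class MergingCsvPipeline:
    """Hypothetical pipeline: collect pages per chapter, write once at the end."""

    def __init__(self, path="douluodalu.csv"):
        self.path = path
        # chapter_url -> {page_number: (title, content)}
        self.chapters = defaultdict(dict)
        # chapter URLs in the order they were first seen
        self.order = []

    def process_item(self, item, spider):
        chapter_url, page = split_url(item["url_now"])
        if chapter_url not in self.chapters:
            self.order.append(chapter_url)
        self.chapters[chapter_url][page] = (item["title"], item["content"])
        return item

    def close_spider(self, spider):
        with open(self.path, "w", newline="", encoding="utf-8") as f:
            writer = csv.writer(f)
            writer.writerow(["title", "url", "content"])
            for chapter_url in self.order:
                pages = self.chapters[chapter_url]
                # Take the title from the lowest-numbered page that arrived.
                title = pages[min(pages)][0]
                # Join the page contents in page order, not arrival order.
                content = "".join(pages[p][1] for p in sorted(pages))
                writer.writerow([title, chapter_url, content])
```

Buffering matters because Scrapy downloads pages concurrently, so the second page of a chapter is not guaranteed to reach the pipeline right after the first. Rows come out in the order each chapter was first seen, which may differ from the book's chapter order unless the spider follows the "next" link one page at a time. The whole novel is held in memory until the spider closes, which should be acceptable for a single book.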